Everything You Know About Latency Is Wrong

Theo Schlossnagle

Theo founded Circonus in 2010, and continues to be its principal architect. He has been architecting, coding, building and operating scalable systems for 20 years. As a serial entrepreneur, he has founded four companies and helped grow countless engineering organizations. Theo is the author of Scalable Internet Architectures (Sams), a contributor to Web Operations (O'Reilly) and Seeking SRE (O'Reilly), and a frequent speaker at worldwide IT conferences. He is a member of the IEEE and a Distinguished Member of the ACM.

As more companies transform into service-centric, "always on" environments, they are implementing Site Reliability Engineering (SRE) principles like Service Level Objectives (SLOs). SLOs are an agreement on an acceptable level of availability and performance, and they are fundamental to helping engineers properly balance risk and innovation.

SLOs are typically defined around both latencies and error rates. This article will dive deep into latency-based SLOs. Setting a latency SLO is about setting the minimum viable service level that will still deliver acceptable quality to the consumer. It's not necessarily the best you can do; it's an objective for what you intend to deliver. To position yourself for success, this should always be the minimum viable objective, so that you can more easily accrue error budgets to spend on risk.

Calculating SLOs correctly requires quite a bit of statistical analysis. Unfortunately, when it comes to latency SLOs, the math is almost always done wrong. In fact, the vast majority of calculations performed by many of the tools available to SREs are done incorrectly. The consequences of this are huge: math done incorrectly when computing SLOs can result in tens of thousands of dollars of unneeded capacity, not to mention the cost of human time.

This article will delve into how to frame and calculate latency SLOs the right way. While this topic is too complex to tackle fully in a single article, this information will at least provide a foundation for understanding what NOT to do, as well as how to approach computing SLOs accurately.

The Wrong Way: Aggregation of Percentiles

The most misused technique in calculating SLOs is the aggregation of percentiles. You should almost never average percentiles, because even very basic aggregation tasks cannot be accommodated by percentile metrics. It is often thought that since percentiles are cheap to obtain from most telemetry systems, and good enough to use with little effort, they are appropriate for aggregation and system-wide performance analysis most of the time. Unfortunately, this way you lose the ability to determine when your data is lying to you: for example, when you have high error rates (+/- 5% and greater) that are hidden from you.

The first image below is a common graph used to measure server latency distribution. It covers two months, from the start of June to the end of July, and every pixel represents one value. The 50th percentile, or median, means that about half of the requests (those under the blue line) were served faster than about 140 milliseconds, and about half were served slower than 140 milliseconds.

Of course, most engineers want 99% of their customers to receive a great experience, not just half. So rather than calculating the median, or 50th percentile, you start calculating the 99th percentile. On the purple line at the top, each point is the 99th percentile of however many requests (hundreds, thousands or more) were served in the interval that pixel represents: 99% of those requests were faster than the line, while 1% were slower. It's important to note that you don't know how much faster or slower those requests were, so looking only at the percentile peaks can be highly misleading.

A critical calculation problem here is that each pixel on the graph, as you go forward in time, represents all of the requests that happened in that time slot. Say there are 1,400 pixels and 60 days represented on the graph: 60 days is 1,440 hours, so each pixel represents all of the requests that happened in roughly an entire hour. Now look at the peak on the top right, where there was a spike in the 99th percentile. How many requests actually happened in the hour that the spike represents on the graph?

The problem is that many tools sample the data in order to calculate the 99th percentile, collecting it over a fixed time period such as one minute. If you have sixty one-minute 99th percentile calculations over an hour and you want to know the 99th percentile over the whole hour, there is no way to calculate it from those values. What this graph does is this: as you zoom out and no longer have enough pixels, it takes the points that fall within each pixel and simply averages them together. So what you're really seeing here is an hourly average of 99th percentile values, which statistically means nothing.
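To make this concrete, here is a minimal Python sketch with made-up numbers: one busy minute of 1,000 fast requests and one quiet minute of 10 slow ones. It uses the nearest-rank definition of a percentile (one of several common conventions), and the function and variable names are hypothetical.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]

# Hypothetical latencies in milliseconds for two one-minute windows.
busy_minute = [10] * 1000   # 1,000 fast requests
quiet_minute = [1000] * 10  # 10 slow requests

# What a zoomed-out graph does: average the per-minute 99th percentiles.
averaged_p99 = (percentile(busy_minute, 99) + percentile(quiet_minute, 99)) / 2

# The correct answer: the 99th percentile over every request in both minutes.
true_p99 = percentile(busy_minute + quiet_minute, 99)

print(averaged_p99)  # 505.0
print(true_p99)      # 10
```

The averaged value, 505 ms, is about 50 times the true 99th percentile of 10 ms, because the quiet minute's ten slow requests get the same weight as the busy minute's thousand fast ones.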

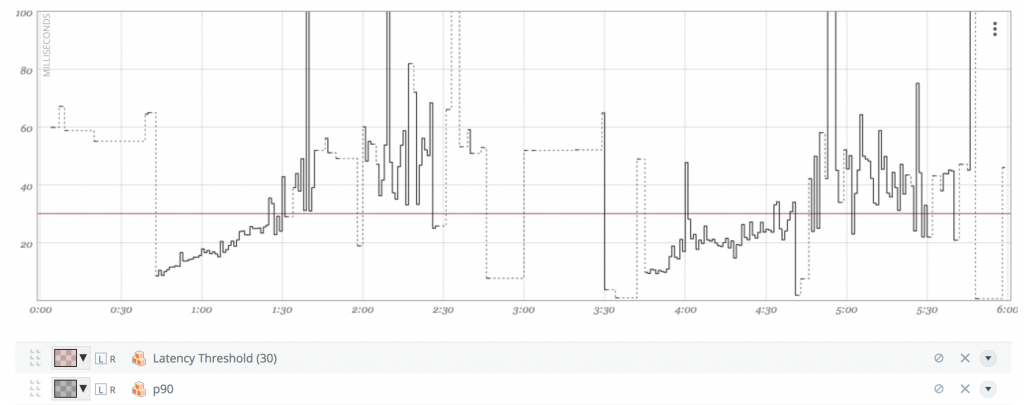

To better illustrate this point, let's take a look at what a latency distribution graph looks like zoomed in, below.

This graph is calculating the 90th percentile over a rolling one-minute period, and you can see it bounces around quite a bit. Although aggregating percentiles is tempting, it can produce materially incorrect results, particularly if your load is highly volatile.

Percentile metrics do not allow you to implement accurate Service Level Objectives that are formulated against hours or weeks. A considerable number of operational time series monitoring systems, both open source and commercial, will happily store percentiles at five-minute (or similar) intervals. But those percentiles are then averaged to fit the number of pixels in the time window on the graph, and that averaged data is mathematically incorrect.

Percentiles are a staple tool of real-world systems monitoring, but their limitations should be understood. Because nearly every monitoring and observability toolset provides percentiles without any limitations on their usage, they can easily be applied to SLO analyses without the operator needing to understand the consequences.

Rethinking How to Compute SLO Latency: Histograms

So what's the correct way to compute SLO latencies? As your services get larger and you're handling more requests, it gets hard to do the math in real time because there's so much data, and it's not always easy to store. Say you want to calculate the 99th percentile and then decide later that the 99th percentile wasn't right and the 95th percentile is. You have to go back through past logs and recalculate everything. And those logs can be expensive, not only to do the math on but to store in the first place.

A better approach than storing percentiles is to store the source sample data in a manner that is more efficient than storing single samples, but still able to produce statistically significant aggregates. Enter histograms. Histograms are the most accurate, fast and inexpensive way to compute SLO latencies. A histogram is a representation of the distribution of a continuous variable, in which the entire range of values is divided into a series of intervals (or "bins") and the representation displays how many values fall into each bin. Histograms are ideal for SLO analysis (or any high-frequency, high-volume data) because they allow you to store the complete distribution of data at scale, rather than a handful of quantiles. The beauty of histogram data is that histograms can be aggregated over time, and they can be used to calculate arbitrary percentiles and inverse percentiles on demand.
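As an illustration, the following Python sketch keeps a histogram as a map from bin to count and computes percentiles after the fact from those counts alone. The binning is a simplified log-linear scheme assumed for this example (two significant decimal digits on integer microsecond values); it is similar in spirit to the log-linear histogram described below, but it is not OpenHistogram's actual API or encoding, and all names are hypothetical.

```python
import math
from collections import Counter

def bin_of(value_us):
    """Return the (lower, upper) bounds of the bin holding a non-negative int.

    Bins keep two significant decimal digits, so 374,512 lands in
    [370,000, 380,000). Values below 10 get unit-width bins.
    """
    if value_us < 10:
        return value_us, value_us + 1
    digits = str(value_us)
    scale = 10 ** (len(digits) - 2)
    lower = int(digits[:2]) * scale
    return lower, lower + scale

def record(hist, value_us):
    """Count one sample; only its bin is kept, not the raw value."""
    hist[bin_of(value_us)] += 1

def percentile(hist, p):
    """Approximate p-th percentile: midpoint of the bin holding that rank."""
    total = sum(hist.values())
    if total == 0:
        raise ValueError("empty histogram")
    rank = max(math.ceil(p * total / 100), 1)
    seen = 0
    for (lower, upper), count in sorted(hist.items()):
        seen += count
        if seen >= rank:
            return (lower + upper) / 2

hist = Counter()
for latency_us in [120, 950, 3_400, 3_450, 47_000, 374_512]:
    record(hist, latency_us)

# Any percentile can be requested later from the same stored counts.
print(percentile(hist, 50))  # 3450.0
print(percentile(hist, 99))  # 375000.0
```

In this simplified scheme each bin spans at most 10% of its lower bound, so for values of 10 µs and above the bin midpoint is within 5% of any sample in that bin; the worst case is a bin starting at 10 times a power of ten.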

For SLO-related data, most practitioners use open source histogram libraries. There are many implementations out there, but here at Circonus we use the log-linear histogram, specifically OpenHistogram. It provides a mix of storage efficiency and statistical accuracy. Worst-case errors at single-digit sample sizes are 5%, quite a bit better than the errors of hundreds of percent seen when averaging percentiles. Some analysts use approximate histograms, such as t-digest. However, those implementations often exhibit double-digit error rates near median values. With any histogram-based implementation there will always be some level of error, but implementations such as log-linear can generally keep that error well under 1%, especially with large numbers of samples.

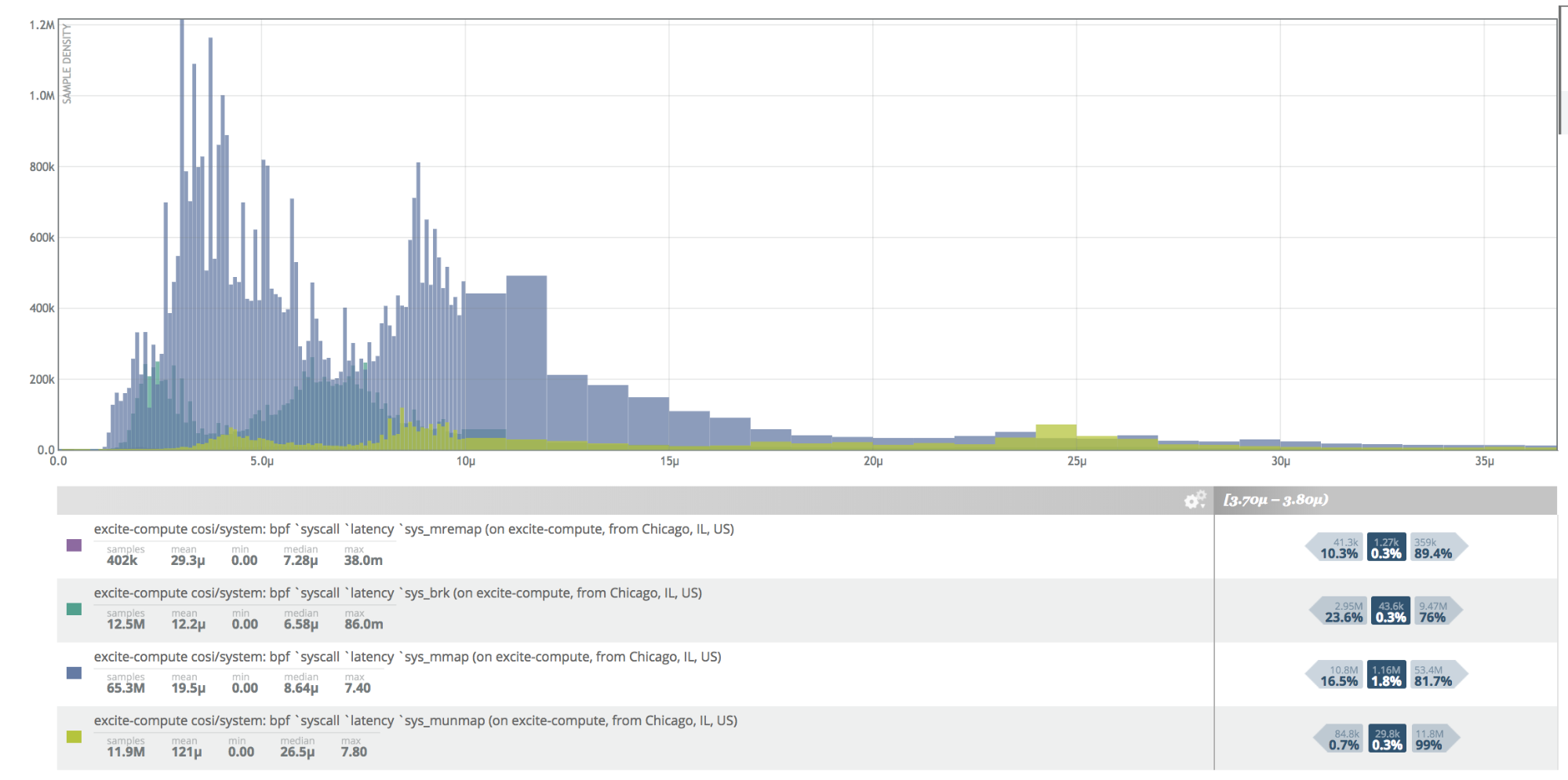

The histogram above includes every single sample that has come in for a specific latency distribution: six million latency samples in total. It shows, for example, that one million samples fall within the 370,000 to 380,000-microsecond bin, and that 99% of latency samples are faster than 1.2 million microseconds. It stores all of these samples in 600 bytes and can accurately calculate percentiles and inverse percentiles, while being very inexpensive to store, analyze and retrieve.

Let's dive deeper into the benefits of histograms and how to use them to calculate SLOs correctly.

Histograms Easily Calculate Arbitrary Percentiles and Inverse Percentiles

Framing SLOs as percentiles is backward; they must be framed as inverse percentiles. For example, when you say "99% of home page requests should be faster than 100 milliseconds," the 99th percentile for latency only tells you how slow the experience is for the 99th percentile of users. This is not especially helpful. What's more relevant is knowing what percentage of users are meeting or exceeding your goal of 100 milliseconds. When you do the math on that, it's called an inverse percentile.

Also, when framing SLOs, there are two time periods that are critical:

- The period over which you calculate your percentile. Example: 5 minutes.

- The period over which you calculate the success of your objective. Example: 28 days.

You need a period over which to calculate your percentile because looking at every single request over 28 days is difficult, especially given traffic spikes. So engineers look at these requests in reasonably sized windows, five minutes in this case. You'll want to ensure your home page requests are under 100 milliseconds over 28 days, and you'll look at this in five-minute windows. There are 288 five-minute windows per day, or 8,064 over 28 days, and you look inside each window to ensure that 99% of requests are faster than 100 milliseconds. At the end of 28 days, you'll know how many windows are winners vs. losers and, based on that information, can make adjustments as needed.

Thus, stating an SLO as "99% under 100 milliseconds" is incomplete. A correct SLO is:

- 99% under 100 milliseconds over any five-minute period, AND 99% of those five-minute periods satisfied over a rolling 28-day period.
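The two-level objective can be checked mechanically. Below is a minimal Python sketch, assuming each five-minute window has already been reduced to two counts (requests under 100 ms and total requests); the function names, the treatment of empty windows, and the sample data are all hypothetical.

```python
WINDOWS_PER_DAY = 24 * 60 // 5          # 288 five-minute windows per day
WINDOWS_28_DAYS = 28 * WINDOWS_PER_DAY  # 8,064 windows in 28 days

def window_ok(fast, total, target_pct=99):
    """A window passes if at least target_pct percent of requests were fast.

    Empty windows count as passing here; that choice is an assumption.
    Integer arithmetic avoids floating-point edge cases at the threshold.
    """
    return total == 0 or fast * 100 >= target_pct * total

def slo_met(windows, window_pct=99, period_pct=99):
    """windows: (fast_count, total_count) pairs, one per five-minute window."""
    passing = sum(window_ok(fast, total, window_pct) for fast, total in windows)
    return passing * 100 >= period_pct * len(windows), passing

# Hypothetical 28 days: every window is healthy except 60 bad ones.
windows = [(995, 1000)] * (WINDOWS_28_DAYS - 60) + [(900, 1000)] * 60
met, passing = slo_met(windows)
print(met, passing)  # True 8004
```

With 8,064 windows in the period, up to 80 failing windows still satisfy the outer 99% target; an 81st failing window would break it.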

The inverse percentile for this SLO (99% of home page requests in the past 28 days served in under 100 ms) is computed as:

% requests = count_below(100ms) / total_count * 100

For example: 99.484% of requests were faster than 100 ms.
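With a histogram, that formula is just a ratio of bin counts. Here is a minimal Python sketch, assuming bins keyed by (lower, upper) bounds in milliseconds with a boundary at exactly 100 ms; the function name is hypothetical, and the counts are made up so the result matches the example figure.

```python
def inverse_percentile(hist, threshold):
    """Percent of samples in bins ending at or below `threshold`.

    Exact only when `threshold` is a bin boundary, so a bin that straddles
    the threshold raises an error instead of being guessed at.
    """
    below = 0
    for (lower, upper), count in hist.items():
        if upper <= threshold:
            below += count
        elif lower < threshold:
            raise ValueError(f"bin [{lower}, {upper}) straddles {threshold}")
    return 100 * below / sum(hist.values())

# Hypothetical 28-day histogram of home page latencies; bins in milliseconds.
hist = {(0, 50): 600_000, (50, 100): 394_840, (100, 200): 5_000, (200, 400): 160}
print(f"{inverse_percentile(hist, 100):.3f}% faster than 100 ms")  # 99.484% faster than 100 ms
```

Rejecting straddling bins makes a misplaced boundary fail loudly rather than produce a quietly wrong number.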

These inverse percentiles are easy to calculate with histograms. And a key benefit is that the histogram allows you to calculate an arbitrary set of percentiles (the median, the 90th, 95th or 99th percentile) after the fact. This flexibility comes in handy when you are still evaluating your service and are not ready to commit to a latency threshold just yet.

Histograms Have Bin Boundaries to Ease Analysis

Histograms divide all actual sample data into a series of intervals called bins. Bins allow engineers to do statistics and reason about the behavior of something without looking at every single data point. What's absolutely critical for accurate analysis is that your histograms have a high number of bins and that you set up your bins the same way across all of your histograms.

There are various monitoring solutions available that offer histograms, but their number of bins is often extremely limited: it can be as low as eight or ten. With that few bins, the error margin on your calculations is astronomical. For comparison, Circllhist has 43,000 bins. It's critical that you have enough bins in the latency range relevant to your percentiles to bound the error (e.g., to 5%). That way, you can guarantee 5% accuracy on all percentiles, no matter how the data is distributed.

It's important that you set your bin boundaries to match the actual question you're answering. In our example, if you want requests to be faster than 100 milliseconds, make sure one of your bin boundaries falls exactly on 100 milliseconds. That way, you can accurately answer the inverse percentile question for 100 milliseconds, because the histogram counts every sample, and the bins below that boundary count exactly the samples under 100 milliseconds.
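To see why boundary placement matters, here is a minimal Python sketch that returns a range rather than a single number when the threshold falls inside a bin. The bin layouts (in milliseconds), the counts, and the function name are hypothetical; both layouts describe the same 1,000 samples.

```python
def inverse_percentile_bounds(hist, threshold):
    """Return (low, high): the range of possible percentages faster than threshold.

    Samples in a bin that straddles the threshold could fall on either side,
    so they widen the range. When threshold is a bin boundary, low == high.
    """
    total = sum(hist.values())
    surely_below = sum(c for (lo, hi), c in hist.items() if hi <= threshold)
    straddling = sum(c for (lo, hi), c in hist.items() if lo < threshold < hi)
    return 100 * surely_below / total, 100 * (surely_below + straddling) / total

# Hypothetical histograms in milliseconds with the same 1,000 samples.
coarse = {(0, 80): 900, (80, 120): 90, (120, 500): 10}               # no edge at 100
fine = {(0, 80): 900, (80, 100): 85, (100, 120): 5, (120, 500): 10}  # edge at 100

print(inverse_percentile_bounds(coarse, 100))  # (90.0, 99.0)
print(inverse_percentile_bounds(fine, 100))    # (98.5, 98.5)
```

With the coarse layout, all you can say is that somewhere between 90% and 99% of requests met the 100 ms goal, which spans a clear miss and a pass against a 99% target; with an edge at 100 ms the answer is exact.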

Your histograms should also have common bin boundaries, so that you can easily add them together. For example, you can take the histograms from this minute and last minute and add them up into a single histogram covering both minutes, and likewise roll minutes up into hours, days and months. Then when you want to know what your 99th percentile was for last month, you can get the answer immediately. In fact, it's usually a good idea to mandate the bin boundaries for your whole organization; otherwise, you will not be able to aggregate histograms from different services.
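Because the histograms share the same bin boundaries, merging is plain addition of counts per bin. Here is a minimal sketch using Python's `collections.Counter`, with bins keyed by (lower, upper) bounds in milliseconds; the bin layout, counts, and `merge` helper are hypothetical.

```python
from collections import Counter

def merge(histograms):
    """Sum bin counts across histograms that share the same bin boundaries."""
    total = Counter()
    for hist in histograms:
        total.update(hist)  # Counter.update adds counts for matching bins
    return total

# Hypothetical minute histogram; 60 identical minutes for brevity.
minute = Counter({(0, 50): 940, (50, 100): 55, (100, 200): 5})
hour = merge([minute] * 60)
day = merge([hour] * 24)

print(hour[(100, 200)])    # 300
print(sum(day.values()))   # 1440000
```

Since addition is associative, minutes can be rolled into hours and hours into days in any order. If two services had used different boundaries, say a bin edge at 50 ms in one and 60 ms in the other, there would be no correct way to split their counts so the bins line up.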

Histograms Let You Iterate SLOs Continuously

As a reminder, SLOs should be the lowest possible objective (the minimum your users expect from you) so that you can take more risks. If you set your objective to 99%, but nobody notices a problem until you hit 95%, then your SLO is too high, costing you unnecessary time and money. But if you set it at 95%, then you have more budget and room to take risks. Thus, it's important to set SLOs at the minimum feasible level, so that you can budget around it. There are big differences between 99.5% and 99.2%, and between 100 and 115 milliseconds.
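To put numbers on those differences, the sketch below converts a window-level target into an error budget, assuming the 28-day, five-minute-window framing described earlier. The `error_budget` helper is hypothetical and the figures are for illustration only.

```python
WINDOWS = 28 * 24 * 60 // 5  # 8,064 five-minute windows in 28 days

def error_budget(target_pct):
    """Failing windows allowed at a target, and their duration in hours."""
    # Round down: a fraction of a window cannot be spent.
    allowed = int(WINDOWS * (100 - target_pct) / 100)
    return allowed, allowed * 5 / 60

for target in (95, 99, 99.2, 99.5):
    windows, hours = error_budget(target)
    print(f"{target}%: {windows} failing windows allowed (about {hours:.1f} hours)")
```

This prints budgets of 403, 80, 64 and 40 windows respectively: moving from 99.5% to 99.2% gives 60% more room for risk over the same 28 days.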

But how do you know what this number should be to start? The answer is you don't. It's impossible to get it right the first time. You must keep testing and measuring. By keeping historical data at high granularity and accuracy via histograms, you can iteratively optimize your parameters. You will be able to look at all of your data together and quantitatively home in on a good number. For example, you can look at the last two or three months of data to identify the threshold at which you begin to see a negative impact downstream.

For instance, the histogram above includes 10 million latency samples, stored every five minutes. It shows how the behavior of your system changes over time and can help inform how you set your SLO thresholds. You can identify, for example, that you failed your SLO of 99% under 100 milliseconds and were serving requests at 300 milliseconds for an entire day, but the downstream consumers of your service never noticed. This is an indication that you are overachieving, which you should not do with SLOs.

Your software and your consumers are always changing, and that's why setting and measuring your SLOs must be an iterative process. We've seen companies spend thousands of dollars and waste significant resources trying to achieve an objective that was entirely unnecessary, because they didn't iteratively go back and do the math to make sure their objectives were set reasonably. So be sure to respect the accuracy and mathematics around how you calculate your SLOs, because they feed almost directly back into budgets. You can get a bigger budget by setting your expectations as low as possible while still achieving the optimal outcome.

Respect the Math

When you set an SLO, you're making a promise to the rest of the organization, and typically there are hiring, resource, capital and operational expense decisions built around that. This is why it's absolutely critical to frame and calculate your SLOs correctly. Auditable, measurable data is the cornerstone of setting and meeting your SLOs, and while this is complicated, it is absolutely worth the effort to get right. Remember: never aggregate percentiles. Log-linear histograms are the most efficient way to accurately compute latency SLOs, allowing you to calculate arbitrary inverse percentiles, iterate over time, and go back to your data rapidly and inexpensively at any time to answer any question.

Source: https://thenewstack.io/how-to-correctly-frame-and-calculate-latency-slos/
